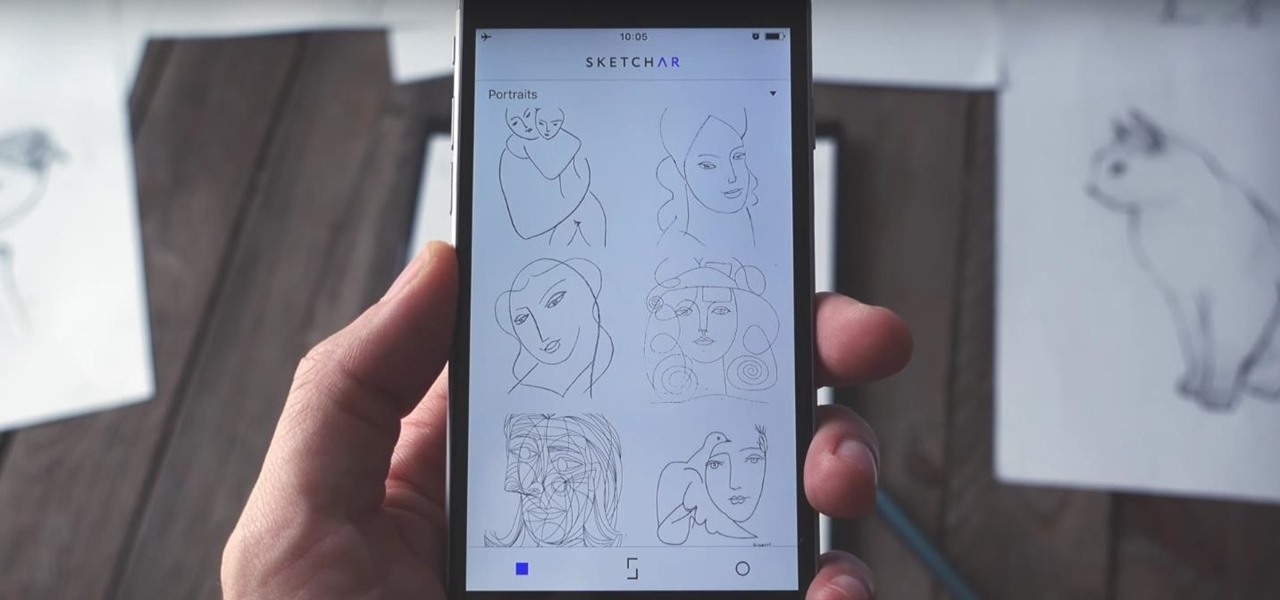

Augmented Reality News How-Tos

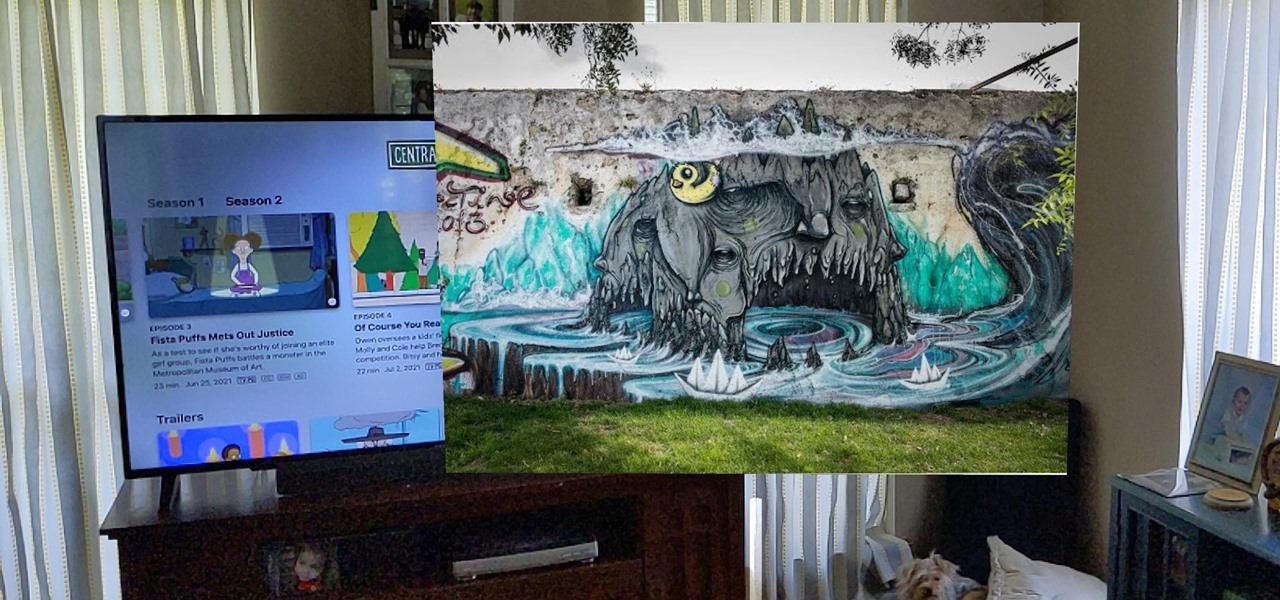

How To: View Art from Your Chromecast in Augmented Reality

The Chromecast TV streaming lineup from Google is one of the more popular products in the category, primarily due to its low price tag and broad app support.

How To: Film Your Own AR Music Videos with Vidiyo, Lego's TikTok Competitor

For its latest take on augmented reality-infused playsets, Lego is giving the young, and the simply young at heart, its twist on the viral lip-sync format made popular by TikTok with Lego Vidiyo.

ARKit 101: How to Place a Virtual Television & Play a Video on It in Augmented Reality

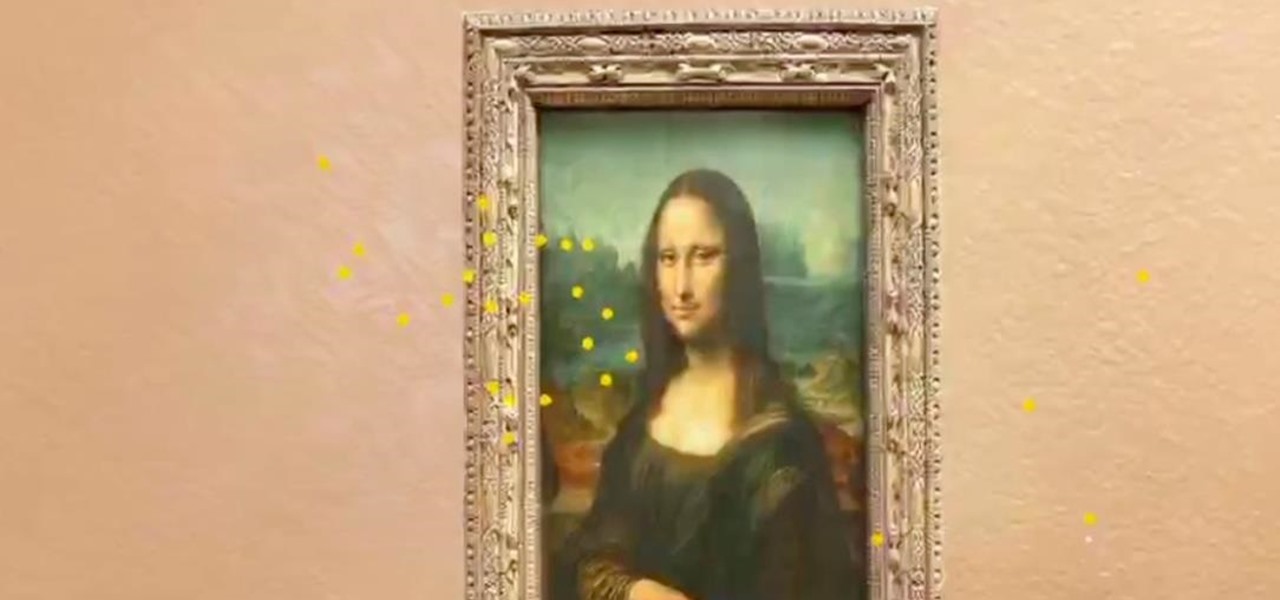

In a previous tutorial, we were able to place the Mona Lisa on vertical surfaces such as walls, books, and monitors using ARKit 1.5. By combining the power of SceneKit and SpriteKit (Apple's 2D graphics engine), we can play a video on a flat surface in ARKit.

ARKit 101: How to Place 2D Images, Like a Painting or Photo, on a Wall in Augmented Reality

In a previous tutorial, we were able to measure vertical surfaces such as walls, books, and monitors using ARKit 1.5. With the advent of vertical plane anchors, we can now also attach objects onto these vertical walls.

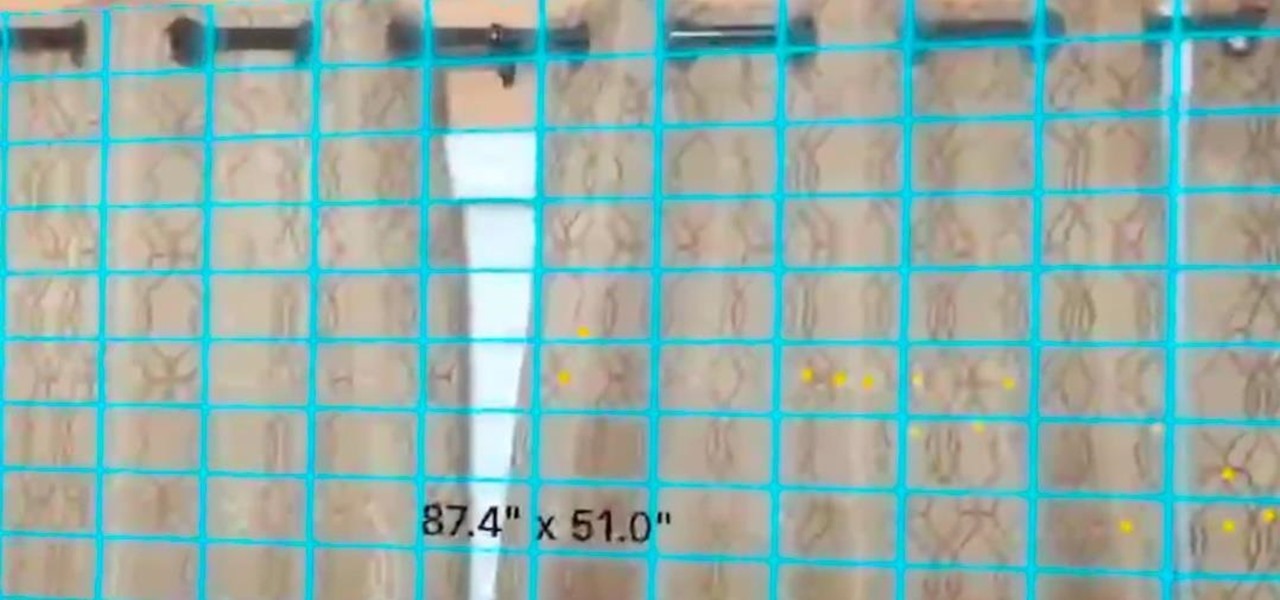

ARKit 101: How to Detect & Measure Vertical Planes with ARKit 1.5

In a previous tutorial, we were able to measure horizontal surfaces such as the ground, tables, etc., all using ARKit. With ARKit 1.5, we're now able to measure vertical surfaces like walls!

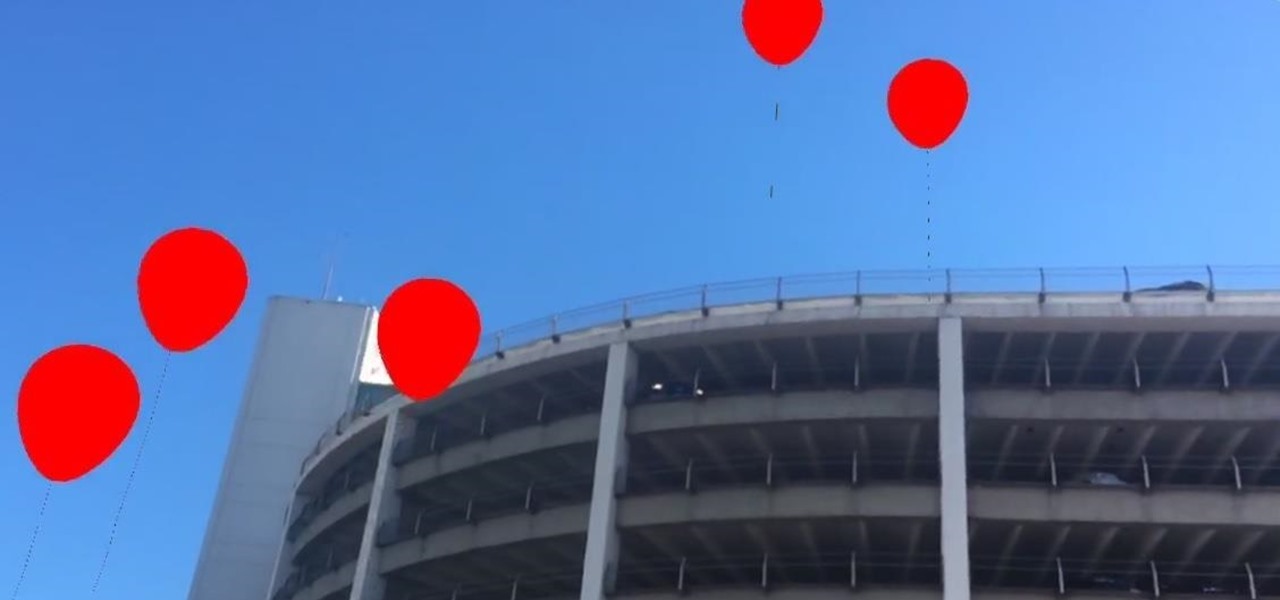

ARKit 101: How to Place a Group of Balloons Around You & Have Them Float Randomly Up into the Sky

Have you ever seen pictures or videos of balloons being let go into the sky and randomly floating away in all directions? It's something you often see in classic posters or movies. Well, guess what? Now you'll be able to do that without having to buy hundreds of balloons. All you'll need is ARKit!

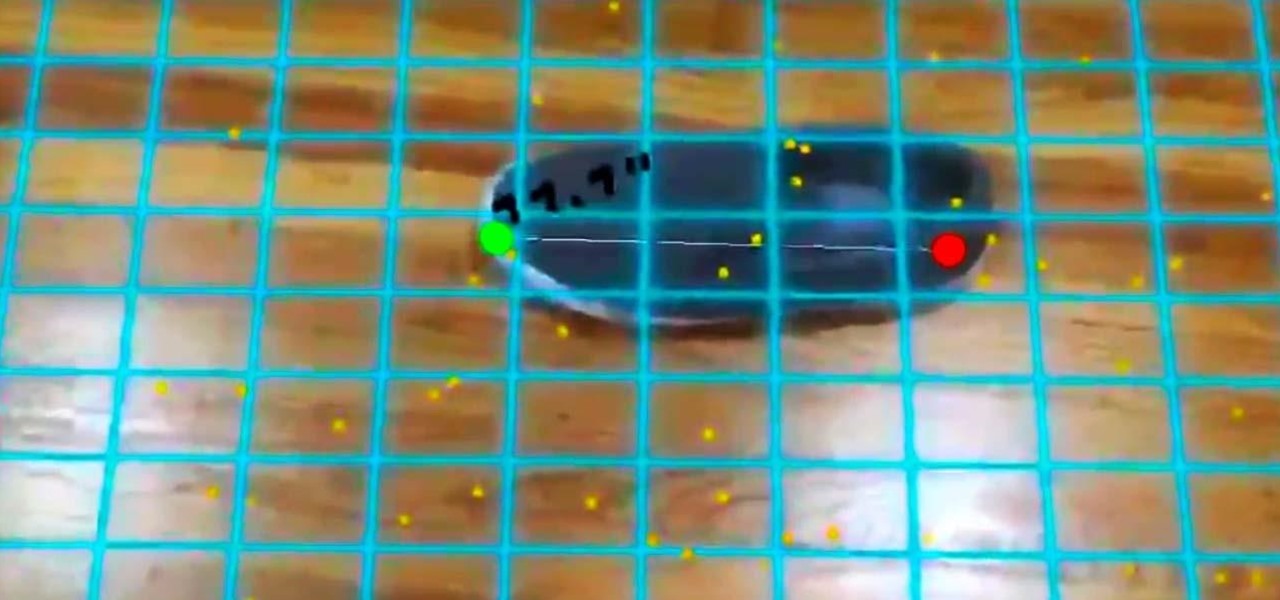

ARKit 101: How to Measure Distance Between Two Points on a Horizontal Plane in Augmented Reality

In our last ARKit tutorial, we learned how to measure the sizes of horizontal planes. It was a helpful entryway into determining spatial relationships between real-world spaces and virtual objects and experiences.
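Measuring between two points comes down to the straight-line (Euclidean) distance between two positions in ARKit's world coordinate space, where one unit is one meter. The sketch below is plain Swift so it runs without a device: `Point3` is a hypothetical stand-in for the `simd_float3` position you would read from a hit-test result's `worldTransform.columns.3`, and the function names are illustrative rather than taken from the tutorial.

```swift
/// Hypothetical stand-in for simd_float3 so this sketch runs without ARKit.
struct Point3 {
    var x: Double
    var y: Double
    var z: Double
}

/// Euclidean distance between two points; in ARKit world space the result is in meters.
func distance(from a: Point3, to b: Point3) -> Double {
    let dx = b.x - a.x
    let dy = b.y - a.y
    let dz = b.z - a.z
    return (dx * dx + dy * dy + dz * dz).squareRoot()
}

/// Converts meters to centimeters, rounded to one decimal place for an on-screen label.
func centimeters(_ meters: Double) -> Double {
    return (meters * 1000).rounded() / 10
}

// Two taps on the same horizontal plane (both points share the same height, y).
let start = Point3(x: 0.0, y: 0.0, z: 0.0)
let end = Point3(x: 0.3, y: 0.0, z: 0.4)
let meters = distance(from: start, to: end)
print("\(centimeters(meters)) cm")  // prints "50.0 cm"
```

Rounding only the displayed value keeps the full-precision distance available for any further calculations.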

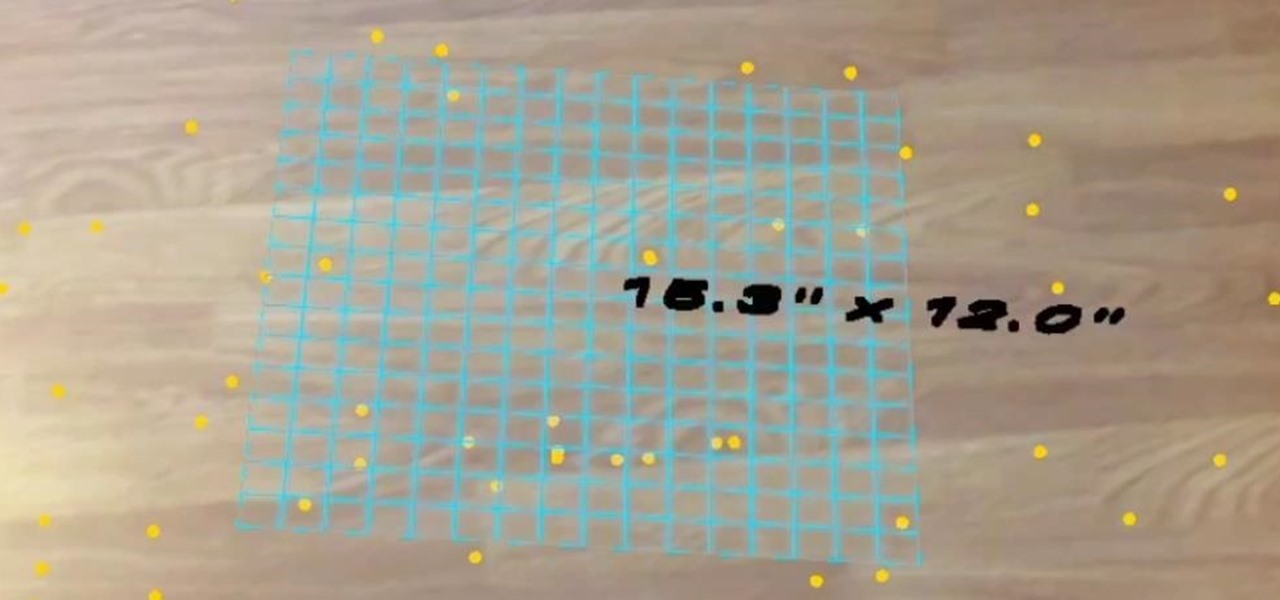

ARKit 101: How to Measure the Ground Using ARKit

Have you noticed the many utility ARKit apps on the App Store that allow you to measure the sizes of horizontal planes in the world? Guess what? After this tutorial, you'll be able to do this yourself!
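A minimal sketch of the calculation such apps perform, assuming the measurement is the area of the rectangular estimate ARKit keeps for a detected plane (an `ARPlaneAnchor` exposes this as `extent`, whose x and z components are the plane's width and length in meters). `PlaneSize` and `area(of:)` are hypothetical names, written in plain Swift so the sketch runs without ARKit; the tutorial's own approach may differ.

```swift
/// Hypothetical stand-in for a detected horizontal plane's estimated size, in meters.
/// In ARKit 1.x, ARPlaneAnchor.extent.x and .z carry these two values.
struct PlaneSize {
    var width: Double   // extent along the plane's local x-axis
    var length: Double  // extent along the plane's local z-axis
}

/// Area of the plane's rectangular estimate, in square meters.
func area(of plane: PlaneSize) -> Double {
    return plane.width * plane.length
}

// ARKit refines a plane as more of the surface is scanned, so an app recomputes the
// measurement on each update. These sizes are simulated updates, not real ARKit output.
let updates = [
    PlaneSize(width: 0.5, length: 0.5),
    PlaneSize(width: 1.0, length: 0.75),
    PlaneSize(width: 1.0, length: 2.0),
]
let areas = updates.map { area(of: $0) }
print(areas)  // prints [0.25, 0.75, 2.0]
```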

ARKit 101: How to Launch Your Own Augmented Reality Rocket into the Real World Skies

Have you been noticing SpaceX and its launches lately? Ever imagined how it would feel to launch your own rocket into the sky? Well, imagine no longer!

ARKit 101: How to Place Grass on the Ground Using Plane Detection

Ever notice how some augmented reality apps can pin specific 3D objects on the ground? Many AR games and apps can accurately plant various 3D characters and objects on the ground in such a way that, when we look down upon them, the objects appear to be entirely pinned to the ground in the real world. If we move our smartphone around and come back to those spots, they're still there.

ARKit 101: How to Pilot Your 3D Plane to a Location Using 'hitTest' in ARKit

Hello, budding augmented reality developers! My name is Ambuj, and I'll be introducing all of you Next Reality readers to the world of ARKit, as I'm developing an ARKit 101 series on using ARKit to create augmented reality apps for iPad and iPhone. My background is in software engineering, and I've been working on iOS apps for the past three years.

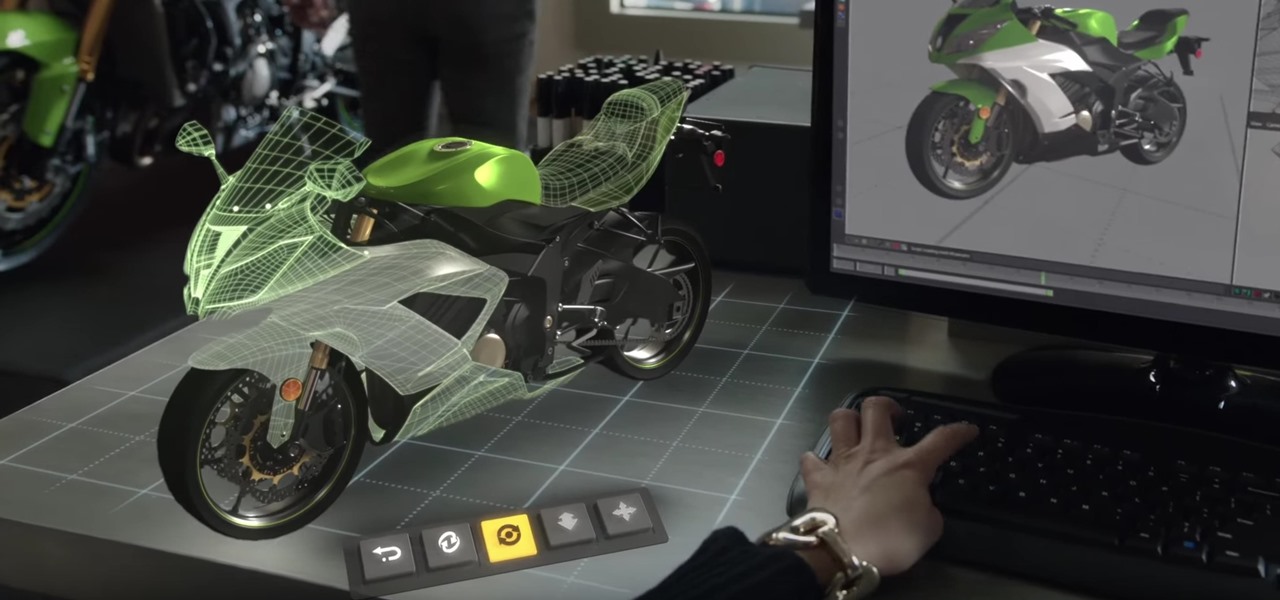

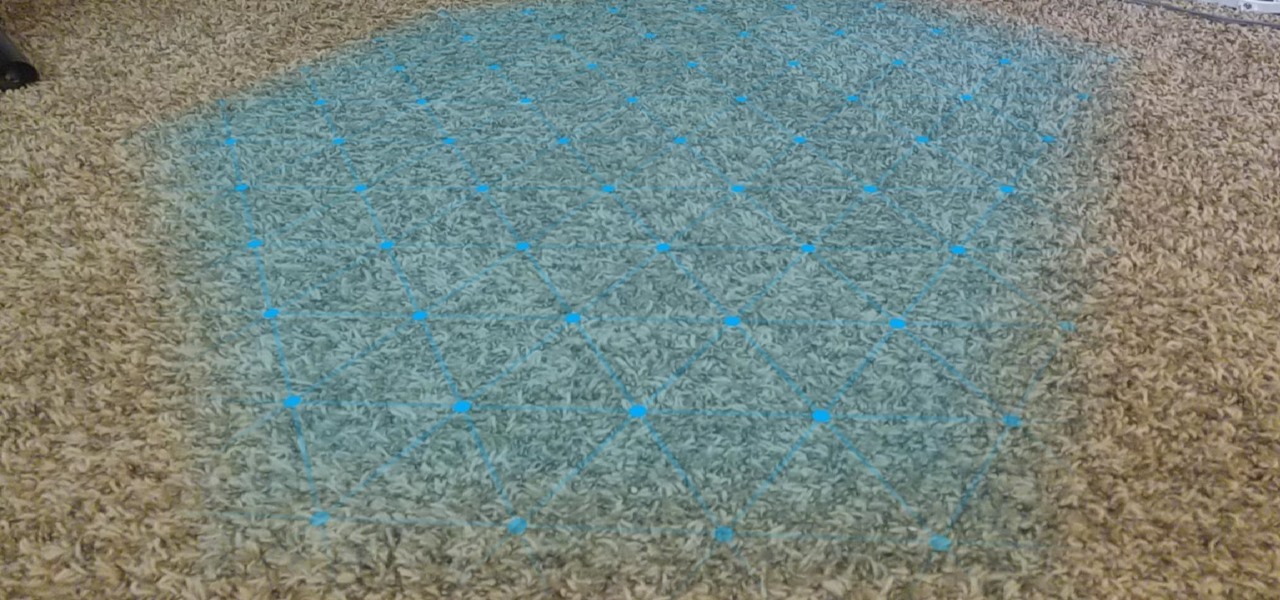

ARCore 101: How to Create a Mobile AR Application in Unity, Part 4 (Enabling Surface Detection)

One of the primary factors that separates an augmented reality device from a standard heads-up display such as Google Glass is dimensional depth perception. This can be achieved with RGB cameras, infrared depth cameras, or both, depending on the level of accuracy you're aiming for.

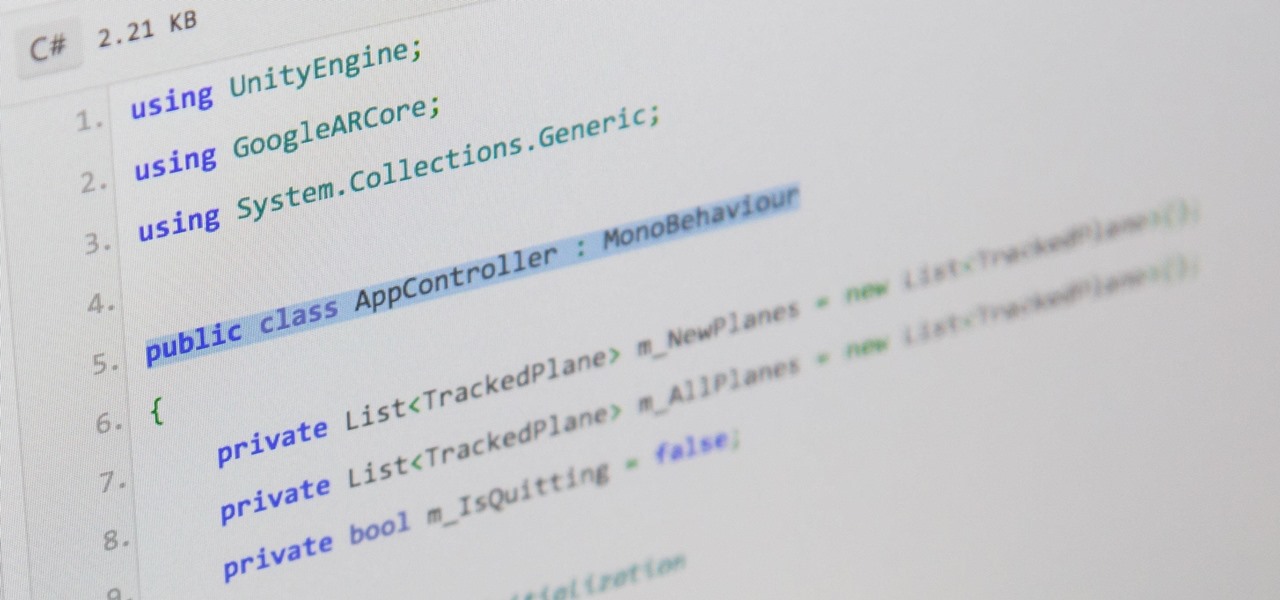

ARCore 101: How to Create a Mobile AR Application in Unity, Part 3 (Setting Up the App Controller)

There are hundreds, if not thousands, of programming languages and language variants in existence. Currently, in the augmented reality space, it seems the Microsoft-created C# has won out as the overall top language of choice. While there are other options, such as JavaScript and C++, C# seems to be the most worthwhile place to invest one's time and effort.

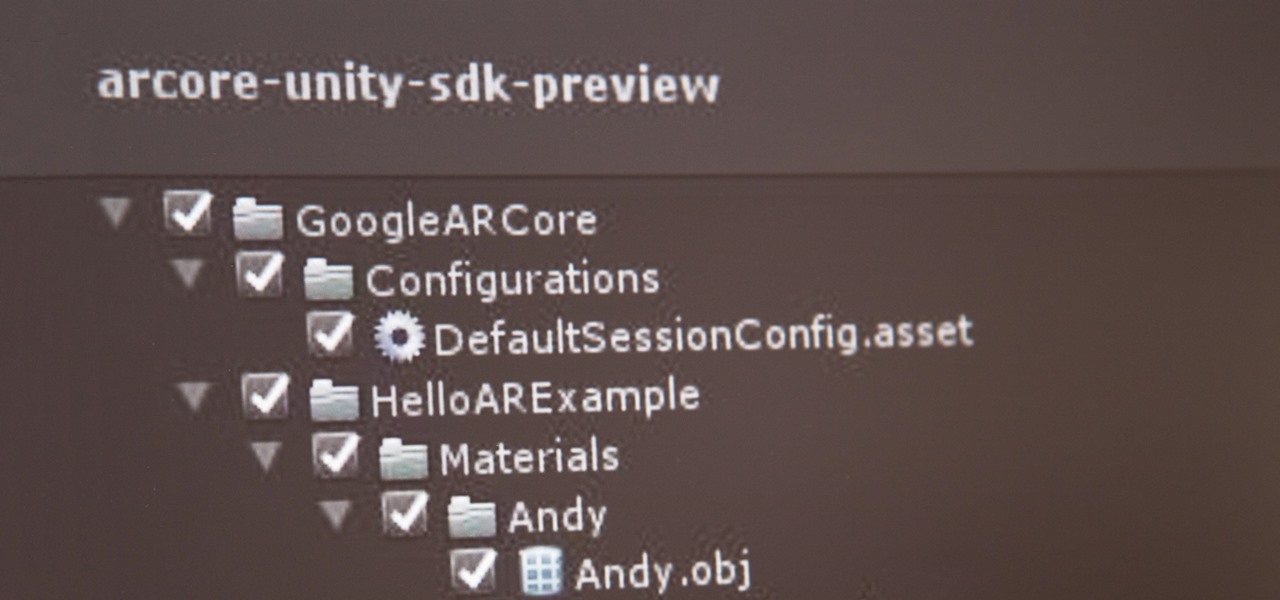

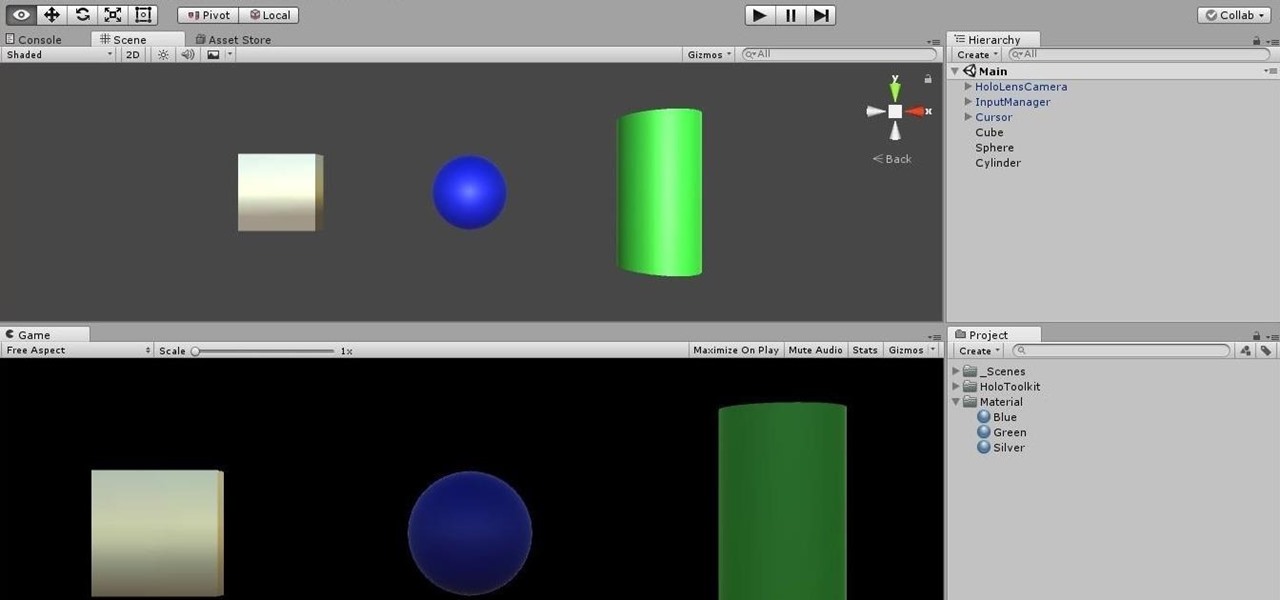

ARCore 101: How to Create a Mobile AR Application in Unity, Part 2 (Setting Up the Framework)

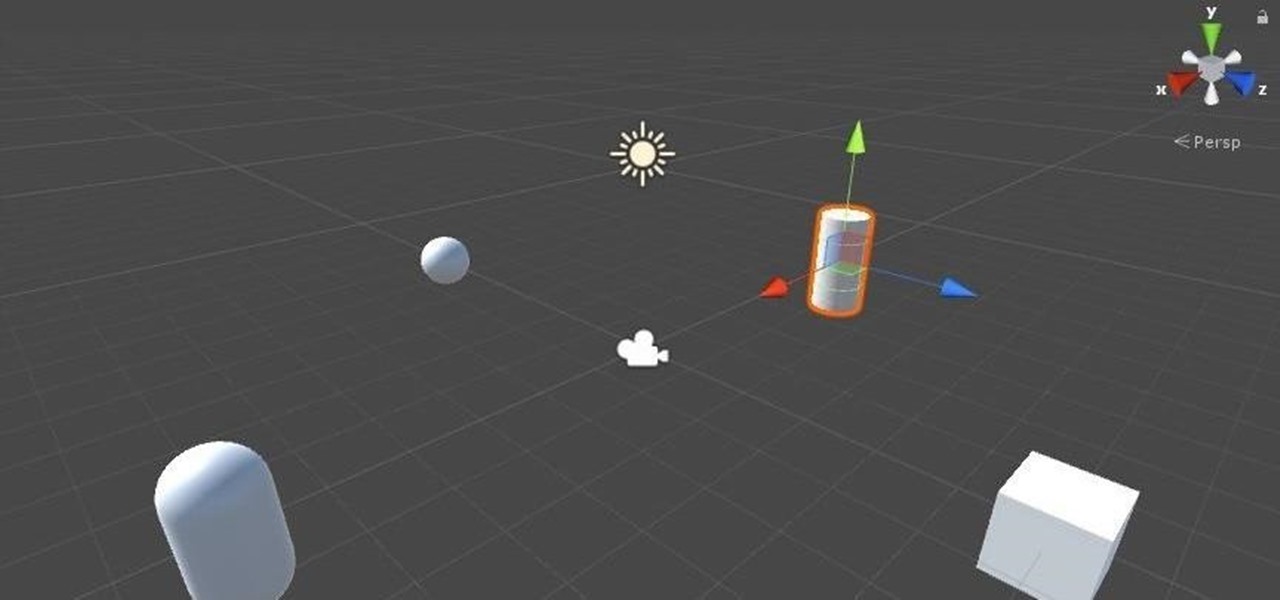

With the software installation out of the way, it's time to build the framework within which to work when building an augmented reality app for Android devices.

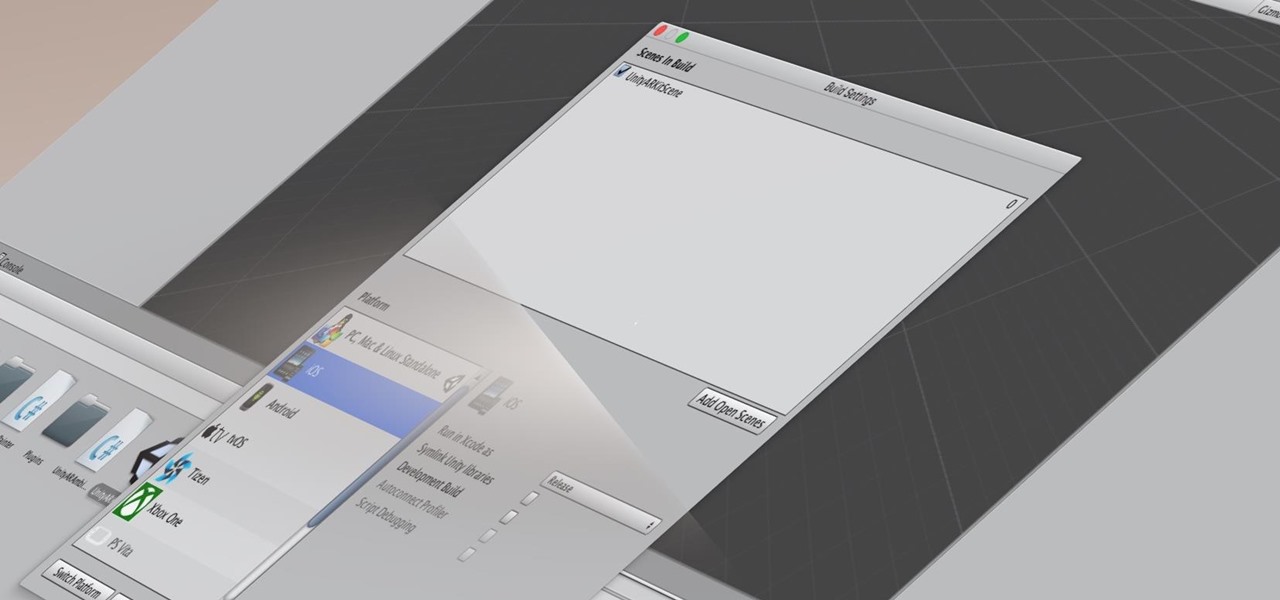

ARCore 101: How to Create a Mobile AR Application in Unity, Part 1 (Setting Up the Software)

If you've contemplated what's possible with augmented reality on mobile devices, and your interest has been piqued enough to start building your own Android-based AR app, then this is a great place to acquire the basic beginner skills to complete it. Once we get everything installed, we'll create a simple project that allows us to detect surfaces and place custom objects on those surfaces.

ARCore 101: How to Create a Mobile AR Application in Unity

Now that ARCore is out of its developer preview, it's time to get cracking on building augmented reality apps for the supported selection of Android phones available. Since Google's ARCore 1.0 is fairly new, there's not a lot of information out there for developers yet — but we're about to alleviate that.

HoloLens Dev 101: Building a Dynamic User Interface, Part 11 (Rotating Objects)

Continuing our series on building a dynamic user interface for the HoloLens, this guide will show how to rotate the objects that we created, moved, and scaled in previous lessons.

ARKit 101: Creating Simple Interactions in Augmented Reality for the iPhone & iPad

As a developer, before you can make augmented-reality robots that move around in the real world, controlled by a user's finger, you first need to learn how to harness the basics of designing AR software for a touchscreen interface.

HoloLens Dev 101: Building a Dynamic User Interface, Part 10 (Scaling Objects)

An incorrectly scaled object in your HoloLens app can make or break your project, so it's important to master scaling in Unity, including working with uniform and non-uniform scale factors, before moving on to other aspects of your app.

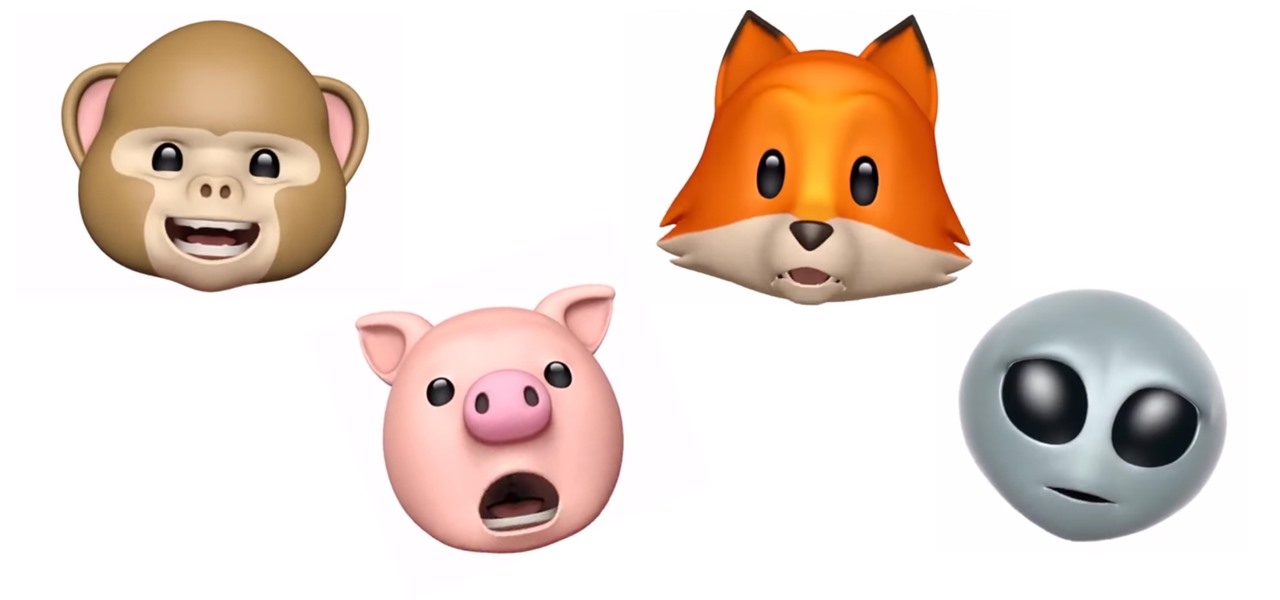

How To: Make Your Own Animoji Karaoke Video

Introduced along with the iPhone X, Animoji are animated characters, mostly animals, that are rendered from the user's facial expressions using the device's TrueDepth camera system to track the user's facial movements.

How To: Set Up Your Phone with the Lenovo Mirage AR Headset for 'Star Wars: Jedi Challenges'

Just in time for the holiday season, Lenovo has released its Mirage AR head-mounted display with the Star Wars: Jedi Challenges game and accessories. Unfortunately, while its price is a fraction of that of most other AR headsets, at the moment it does have a few issues with the setup process.

HoloLens Dev 101: Building a Dynamic User Interface, Part 9 (Moving Objects)

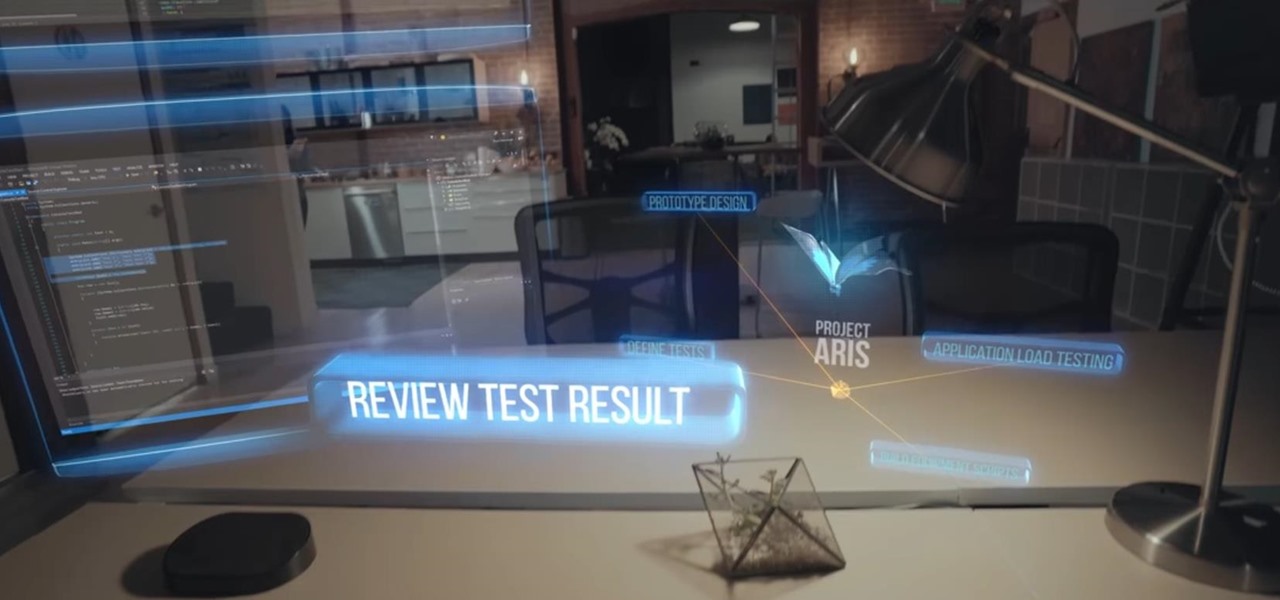

So after setting everything up, creating the system, working with focus and gaze, creating our bounding box and UI elements, unlocking the menu movement, as well as jumping through hoops refactoring a few parts of the system itself, we have finally made it to the point in our series on dynamic user interfaces for HoloLens where we get some real interaction.

How To: Set Up the Meta 2 Head-Mounted Display

So after being teased last Christmas with an email promising that the Meta 2 was shipping, nearly a year later, we finally have one of the units that we ordered. Without a moment's hesitation, I tore the package open, set the device up, and started working with it.

How To: Set Up Windows Mixed Reality Motion Controllers

This is a very exciting time for mixed reality developers and fans alike. In 2017, we have seen a constant stream of new hardware and software releases hitting the virtual shelves. And while most of them have been in the form of developer kits, they bring with them hope and the potential promise of amazing things in the future.

HoloLens Dev 101: Building a Dynamic User Interface, Part 2 (The System Manager)

Now that we have installed the toolkit, set up our prefabs, and prepared Unity for export to HoloLens, we can proceed with the fun stuff involved in building a dynamic user interface. In this section, we will build the system manager.

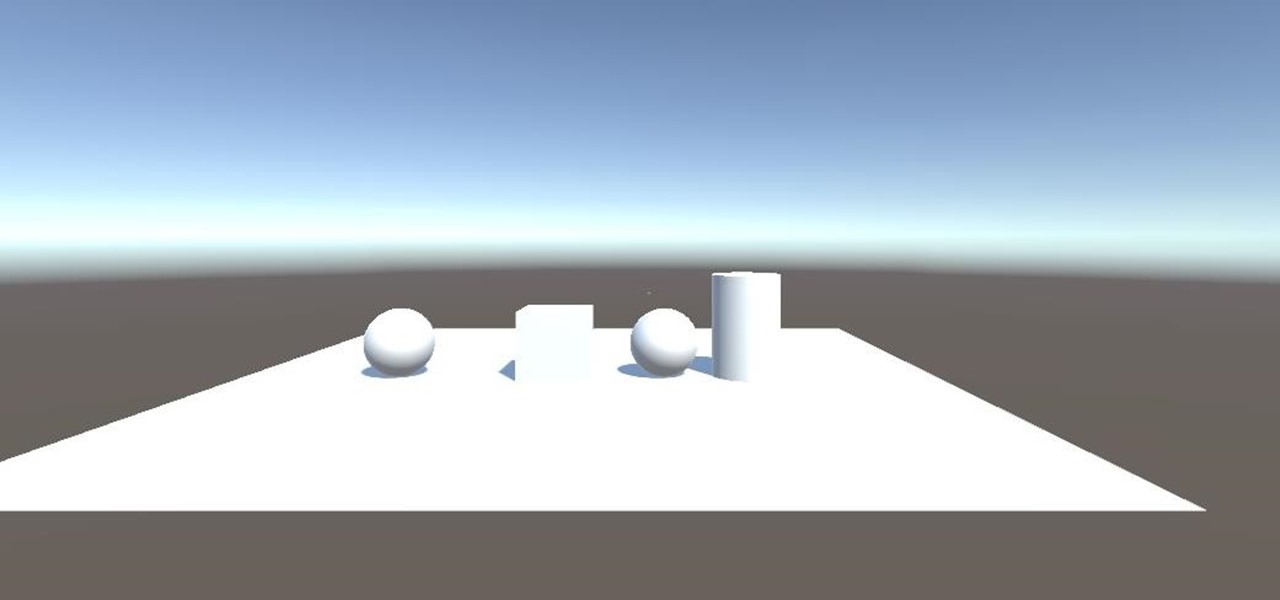

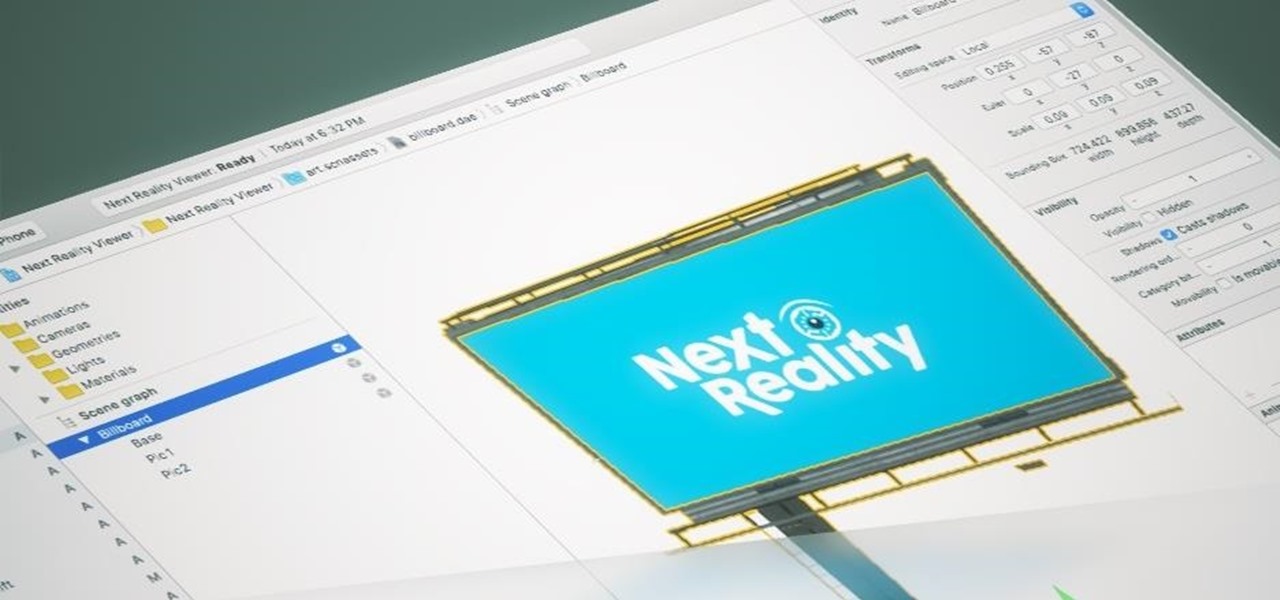

ARKit 101: Using the Unity ARKit Plugin to Create Apps for the iPhone & iPad

Many developers, myself included, use Unity for 3D application development as well as for making games. Many people mistakenly believe Unity to be only a game engine. And that, of course, is how it started. But we now live in a world where our applications have a new level of depth.

ARKit 101: Get Started Building an Augmented Reality Application on an iPhone or iPad Quickly

The reveal of Apple's new ARKit extensions for iPhones and iPads, while not much of a shock, did bring with it one big surprise. By finding a solution to surface detection without the use of additional external sensors, Apple just took a big step over many — though not all — solutions and platforms currently available for mobile AR.

HoloLens Dev 101: How to Get Started with Unity's New Video Player

The release of Unity 5.6 brought with it several great enhancements. One of those enhancements is the new Video Player component. This addition allows for adding videos to your scenes quickly and with plenty of flexibility. Whether you are looking to simply add a video to a plane, or get creative and build a world layered with videos on 3D objects, Unity 5.6 has your back.

HoloLens Dev 101: How to Use Coroutines to Add Effects to Holograms

Being part of the wild frontier is amazing. It doesn't take much to blow the minds of first-time mixed reality users; merely placing a canned hologram in the room is enough. However, once that childlike wonder fades, we need to add more substance to create lasting impressions.

HoloLens Dev 101: How to Explore the Power of Spatial Audio Using Hotspots

When making a convincing mixed reality experience, audio consideration is a must. Great audio can transport the HoloLens wearer to another place or time, help navigate 3D interfaces, or blur the lines of what is real and what is a hologram. Using a location-based trigger (hotspot), we will dial up a fun example of how well spatial sound works with the HoloLens.

HoloLens Dev 101: How to Create User Location Hotspots to Trigger Events with the HoloLens

One of the truly beautiful things about the HoloLens is its completely untethered, the-world-is-your-oyster freedom. This, paired with the ability to view your real surroundings while wearing the device, allows for some incredibly interesting uses. One particular use is triggering events when a user enters a specific location in a physical space. Think of it as a futuristic automatic door.
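The core of such a trigger can be sketched as a distance check against the user's position on every frame, firing only on the transition from outside to inside so the event does not repeat while the user stands in the zone. This is plain Swift rather than the series' Unity C#; `Hotspot`, its circular floor-area test, and the choice to ignore height (the y-axis) are assumptions for illustration. In a Unity HoloLens app, the user's position would typically come from the main camera's transform.

```swift
/// Hypothetical stand-in for a 3D position (e.g. the user's head position each frame).
struct Point3 {
    var x: Double
    var y: Double
    var z: Double
}

/// A circular floor-area hotspot that calls `onEnter` each time the user walks into it.
final class Hotspot {
    let center: Point3
    let radius: Double
    let onEnter: () -> Void
    private var isInside = false
    private(set) var enterCount = 0

    init(center: Point3, radius: Double, onEnter: @escaping () -> Void) {
        self.center = center
        self.radius = radius
        self.onEnter = onEnter
    }

    /// Call once per frame with the user's current position.
    func update(userPosition p: Point3) {
        // Compare horizontal distance only, so head height does not affect the trigger.
        let dx = p.x - center.x
        let dz = p.z - center.z
        let inside = dx * dx + dz * dz <= radius * radius
        // Fire only on the outside-to-inside transition (edge trigger).
        if inside && !isInside {
            enterCount += 1
            onEnter()
        }
        isInside = inside
    }
}

let door = Hotspot(center: Point3(x: 2, y: 0, z: 0), radius: 1.0) {
    print("Hotspot entered: open the door")
}

// Simulated walk: approach, stay inside, leave, and come back.
let path = [
    Point3(x: 0.0, y: 1.6, z: 0.0),
    Point3(x: 1.5, y: 1.6, z: 0.0),
    Point3(x: 2.0, y: 1.6, z: 0.2),
    Point3(x: 4.0, y: 1.6, z: 0.0),
    Point3(x: 2.5, y: 1.6, z: 0.0),
]
for position in path {
    door.update(userPosition: position)
}
print(door.enterCount)  // prints 2
```

Tracking `isInside` between frames is what makes this behave like an automatic door: the event fires once per entry rather than on every frame the user spends inside the zone.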

How To: Get Windows Mixed Reality Before the Creators Update Drops April 11

Soon, users will no longer need an expensive headset or even a smartphone to experience mixed reality. The new Microsoft update will bring mixed reality applications to every Windows computer next month. This upgrade to Windows 10 is named the Windows 10 Creators Update.

HoloLens Dev 102: How to Add Holographic Effects to Your Vuforia ImageTarget

Welcome back to this series on making physical objects come to life on HoloLens with Vuforia. Now that we've set up Vuforia and readied our ImageTarget and camera system, we can see our work come to life. Because in the end, is that not one of the main driving forces when developing—that Frankenstein-like sensation of bringing something to life that was not there before?

HoloLens Dev 102: How to Create an ImageTarget with Vuforia & Set Up the Camera System

Now that we've set up Vuforia in Unity, we can work on the more exciting aspects of making physical objects come to life on the HoloLens. In this guide, we will choose an image (something that you physically have in your home), build our ImageTarget database, and then set up our Unity camera to be able to recognize the chosen image so that it can overlay the 3D holographic effect on top of it.

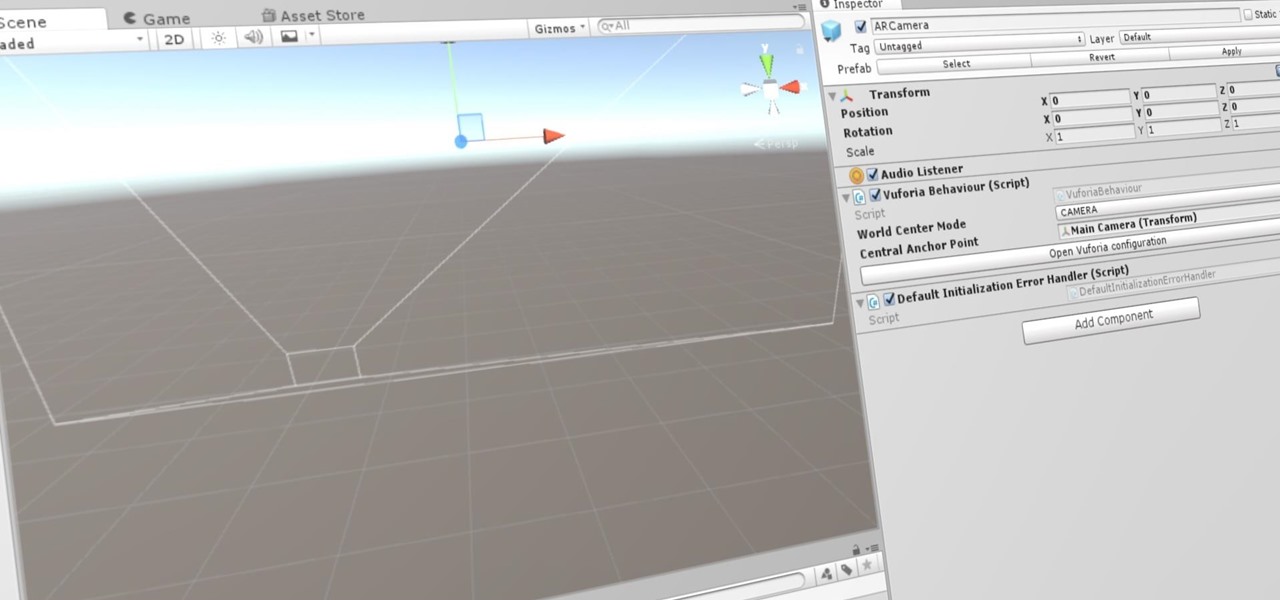

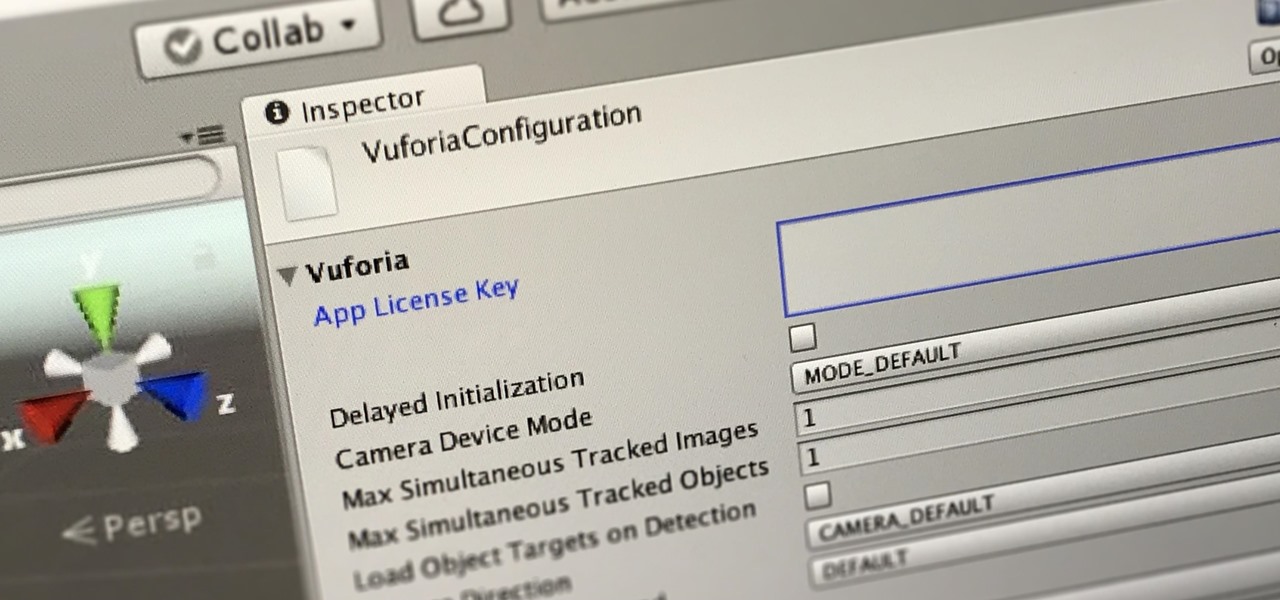

HoloLens Dev 102: How to Install & Set Up Vuforia in Unity

In this first part of our tutorial series on making physical objects come to life on HoloLens, we are going to set up Vuforia in Unity.

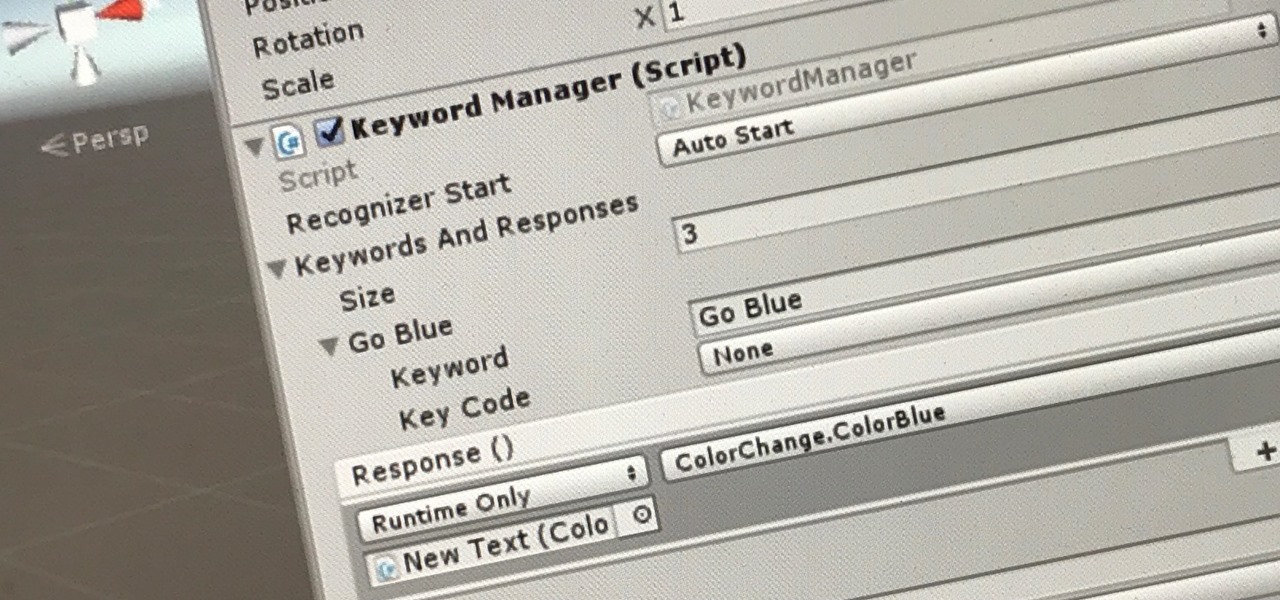

HoloToolkit: How to Add Voice Commands to Your HoloLens App

The HoloToolkit offers many simple ways to add what seem like extremely complex features of the HoloLens, but it can be a bit tricky if you're new to Windows Holographic. So this will be the first in an ongoing series designed to help new developers understand what exactly we can do with the HoloLens, and we'll start with voice commands.

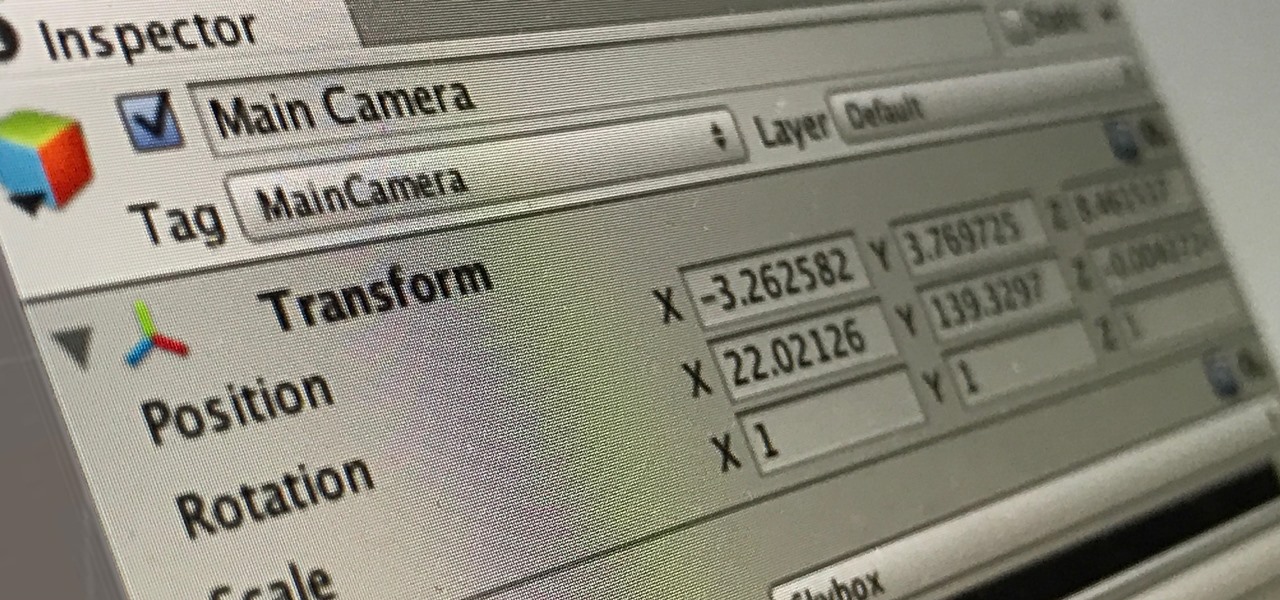

HoloLens Dev 101: The Unity Editor Basics

With any continuously active software, it can start to become fairly complex after a few years of updates. New features and revisions both get layered into a thick mesh of menu systems and controls that even pro users can get bewildered by. If you are new to a certain application after it has been around for many years, it can be downright intimidating to know where to begin.

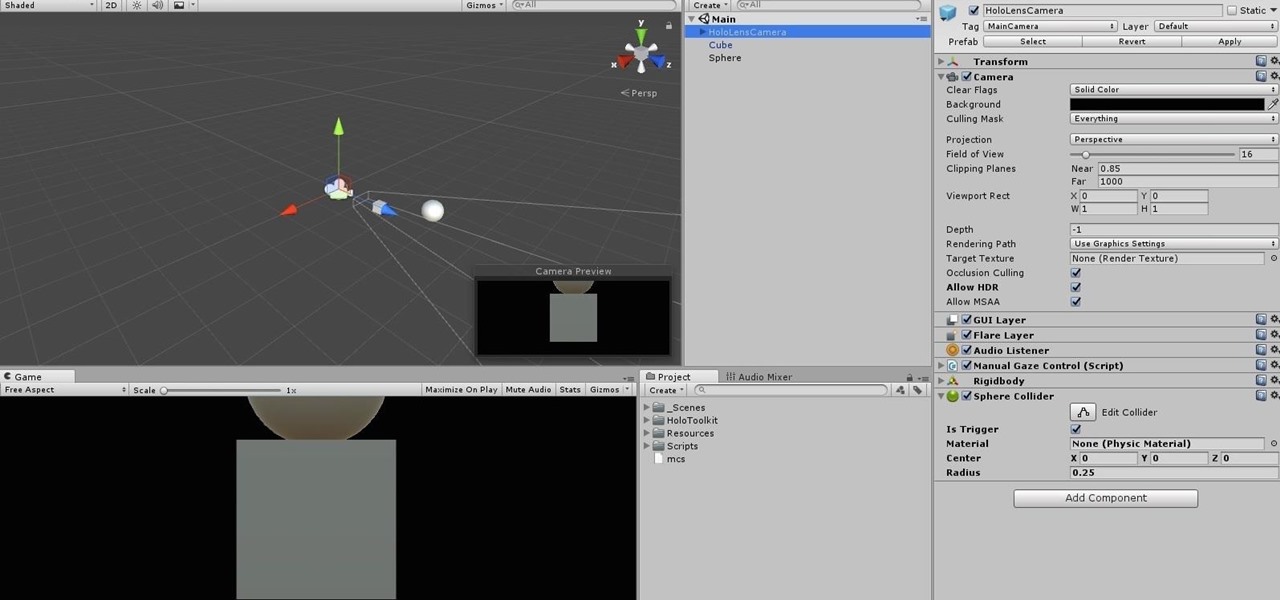

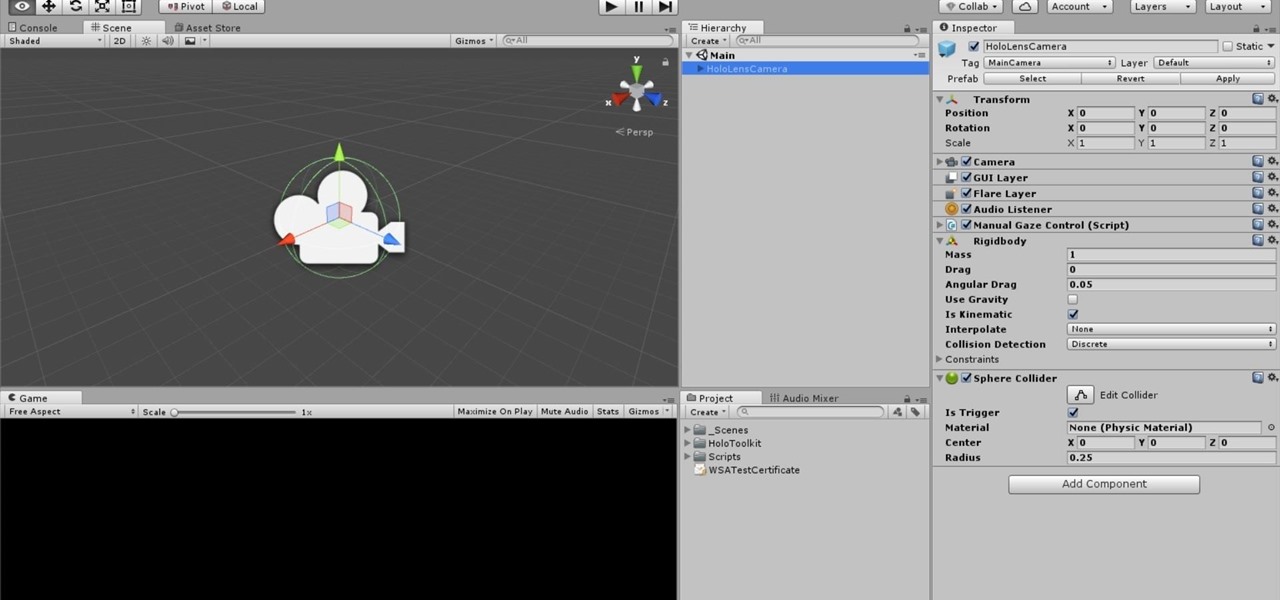

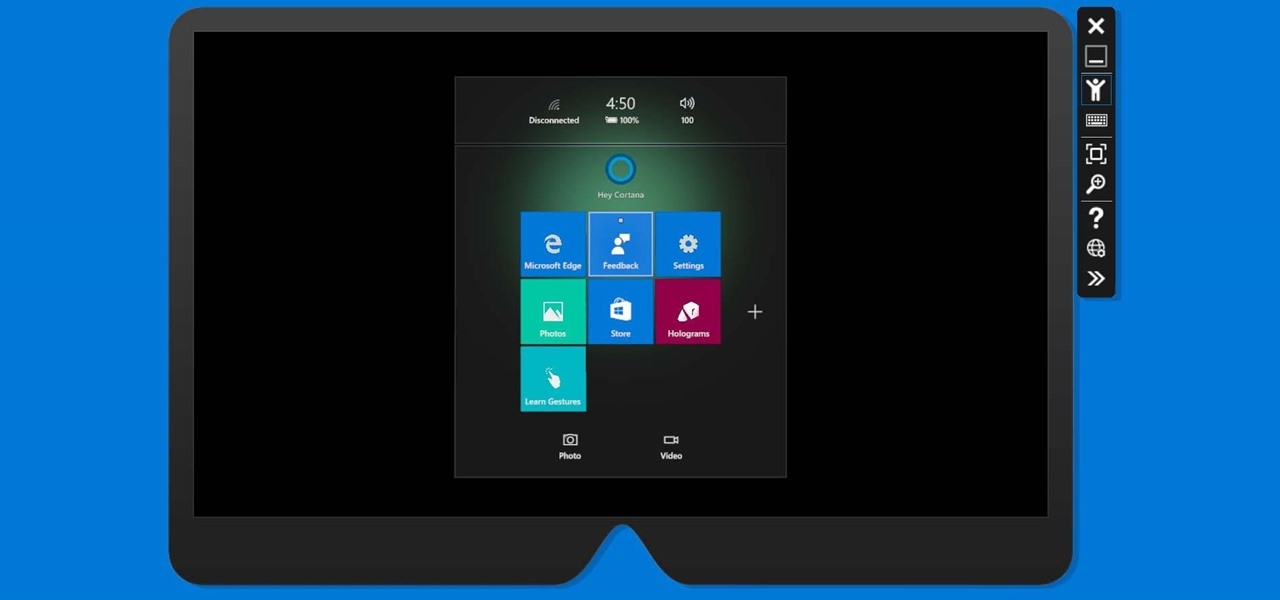

HoloLens Dev 101: How to Build a Basic HoloLens App in Minutes

Now that we've got all of our software installed, we're going to proceed with the next step in our HoloLens Dev 101 series—starting a fresh project and building it into a Holographic application. Then we will output the application to the HoloLens Emulator so we can see it in action.

HoloLens Dev 101: How to Install & Set Up the HoloLens Emulator

Don't let the lack of owning a HoloLens stop you from joining in on the fun of creating software in this exciting new space. The HoloLens Emulator offers a solution for everyone who wants to explore Windows Holographic development.

HoloLens Dev 101: How to Install & Set Up the Software to Start Developing for Windows Holographic

In this first part of my series on getting started with Windows Holographic, we are going to cover everything you need to get set up for developing HoloLens apps. There are many pieces coming together to make one single application, but once you get used to them all, you won't even notice. Now there are different approaches you can take to make applications for HoloLens, but this way is simply the fastest.